Decoding the Future: How Mixture of Experts and NVIDIA Blackwell NVL72 Are Revolutionizing Frontier AI

The relentless pursuit of more intelligent AI models has long been characterized by a singular mantra: bigger is better. For years, we have witnessed an exponential growth in model parameters, leading to increasingly monolithic and compute-hungry architectures. However, a seismic shift is underway, one that promises not just greater intelligence but unprecedented efficiency.

- Mixture-of-Experts (MoE) architecture is now the cornerstone for the top 10 most intelligent open-source AI models, signaling a paradigm shift from dense, monolithic designs.

- NVIDIA's Blackwell NVL72 systems, with their extreme codesign, are delivering a remarkable 10x performance increase for these sophisticated MoE models, fundamentally altering the economics of large-scale AI inference.

Context & Background

The Evolution of AI Architectures: From Dense to Dispersed Intelligence

For a considerable period, the prevailing wisdom in AI development dictated that to achieve superior intelligence, models simply needed to be larger. This approach, centered around "dense" models, engaged all parameters for every single token generation. While undeniably powerful, this strategy became increasingly unsustainable, demanding prodigious amounts of computational power and energy. It presented formidable challenges for scaling and deployment, pushing the limits of existing infrastructure.

The Rise of Mixture-of-Experts (MoE)

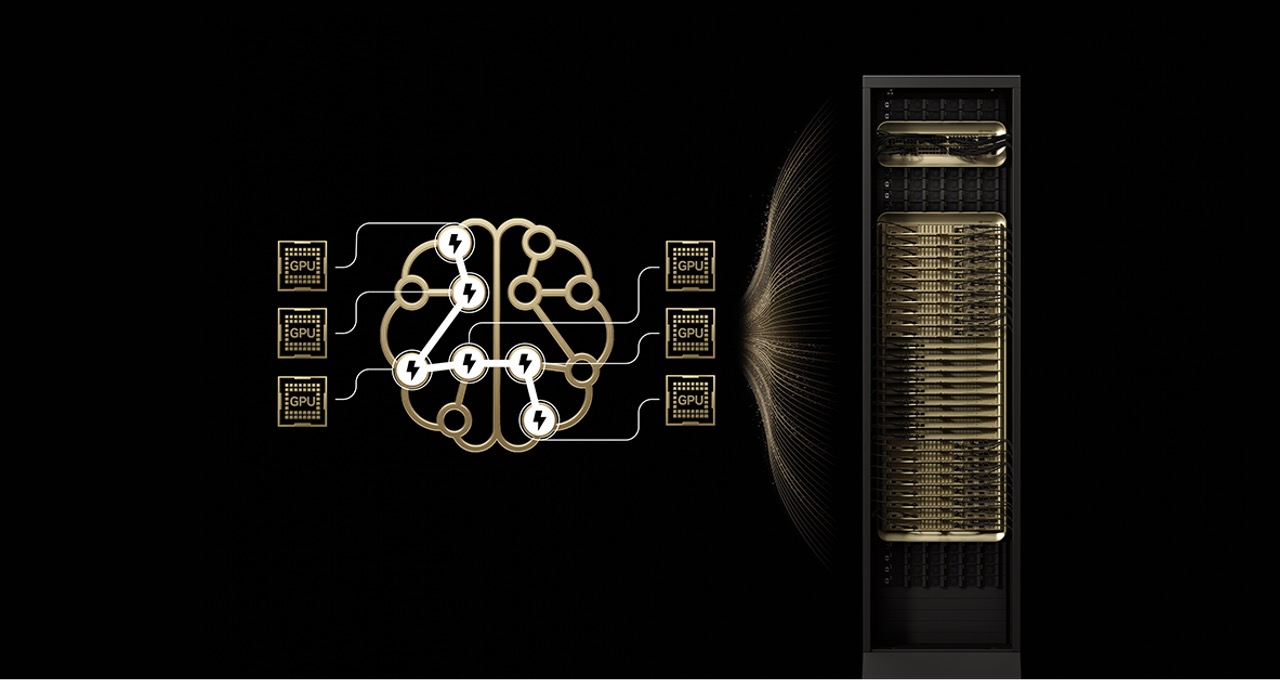

The industry's pivot to the Mixture-of-Experts (MoE) architecture represents a profound conceptual leap, one often compared to how the human brain engages only the regions a task requires. Instead of a single, all-encompassing network, MoE models are composed of numerous specialized "experts." For any given "AI token" (a fundamental unit of processing in AI language models), a routing mechanism activates only the most relevant experts. This selective engagement dramatically reduces the computational load per token without sacrificing the overall intelligence or adaptability of the model.
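
To make the idea of selective activation concrete, here is a minimal sketch of top-k expert routing in plain Python. It is an illustration under stated assumptions, not the routing code of any model named in this article: the gate scores, the four toy experts, and the function names `route_top_k` and `moe_forward` are all hypothetical.

```python
import math

def softmax(scores):
    """Convert raw gate scores into probabilities (numerically stable)."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def route_top_k(gate_scores, k):
    """Return (expert_index, weight) pairs for the k highest-scoring experts.

    Weights are renormalized so the selected experts sum to 1.
    """
    probs = softmax(gate_scores)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    return [(i, probs[i] / norm) for i in top]

def moe_forward(token, gate_scores, experts, k=2):
    """Run only the selected experts and blend their outputs by gate weight."""
    return sum(w * experts[i](token) for i, w in route_top_k(gate_scores, k))

# Hypothetical toy experts: each one simply scales its input.
experts = [lambda x, s=s: s * x for s in (1.0, 2.0, 3.0, 4.0)]

# Hypothetical gate scores for one token: experts 1 and 3 score highest.
gate_scores = [0.1, 2.0, 0.3, 2.0]

routes = route_top_k(gate_scores, k=2)
output = moe_forward(1.0, gate_scores, experts, k=2)
print(routes)   # experts 1 and 3, each with weight 0.5
print(output)   # 0.5 * 2.0 + 0.5 * 4.0 = 3.0
```

Only two of the four experts run for this token; the other two contribute no compute at all, which is the source of the efficiency described above.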

The adoption of MoE is not merely a theoretical preference; it is a demonstrated reality. We observe that the top 10 most intelligent open-source models, as ranked on the independent Artificial Analysis (AA) leaderboard, now predominantly leverage MoE architectures. Prominent examples include DeepSeek AI’s DeepSeek-R1, Moonshot AI’s Kimi K2 Thinking, and Mistral AI’s Mistral Large 3. This widespread adoption underscores the clear advantages MoE offers in terms of efficiency and performance per dollar and per watt.

Critical Analysis

Unpacking the MoE Advantage: Efficiency Meets Intelligence

From our perspective, the allure of MoE models lies in their ability to deliver enhanced intelligence without a commensurate increase in computational cost. By selectively engaging only a subset of their parameters for each token (often just tens of billions out of hundreds of billions), these models achieve a level of efficiency previously unattainable with dense architectures. This design philosophy is particularly compelling for organizations navigating power and cost-constrained data centers, as it promises significantly more intelligence per unit of energy and capital invested. We believe this represents a crucial step towards democratizing access to frontier AI capabilities, making advanced models more feasible for a broader range of applications.
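
The "tens of billions out of hundreds of billions" point can be worked through as simple arithmetic. The sketch below uses hypothetical parameter counts (40B active out of 600B total) and the common rule of thumb that a forward pass costs roughly 2 FLOPs per active parameter per token; neither figure describes a specific model from this article.

```python
def active_fraction(active_params_b, total_params_b):
    """Fraction of parameters engaged per token (both arguments in billions)."""
    if total_params_b <= 0 or not 0 <= active_params_b <= total_params_b:
        raise ValueError("expected 0 <= active <= total and total > 0")
    return active_params_b / total_params_b

def forward_flops_per_token(params_b):
    """Rule-of-thumb estimate: about 2 FLOPs per engaged parameter per token."""
    return 2 * params_b * 1e9

# Hypothetical model sizes, chosen only to illustrate the ratio.
total_b = 600.0
active_b = 40.0

frac = active_fraction(active_b, total_b)
compute_ratio = forward_flops_per_token(total_b) / forward_flops_per_token(active_b)

print(f"active fraction per token: {frac:.1%}")
print(f"dense-equivalent compute is {compute_ratio:.0f}x the MoE compute per token")
```

Under these assumed numbers, a dense model of the same total size would spend about 15 times more compute per token than the MoE model does.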

The rapid embrace of MoE is evident in its market penetration, with over 60% of open-source AI model releases this year incorporating this architecture. This trend has not just been about efficiency; it has directly fueled a nearly 70x increase in model intelligence since early 2023, pushing the very boundaries of what AI can achieve. This rapid advancement signals a maturation of AI development, moving beyond brute-force scaling to more elegant and biologically inspired solutions.

NVIDIA's Blackwell NVL72: The Catalyst for MoE Scalability

Despite its inherent advantages, scaling MoE models in production environments has presented significant hurdles. The sheer size and complexity of these frontier models typically necessitate distributing "experts" across multiple GPUs, a technique known as expert parallelism. Previous platforms, such as the NVIDIA HGX H200, encountered bottlenecks primarily due to memory limitations and latency issues. Loading selected expert parameters from high-bandwidth memory for each token created immense pressure on bandwidth, while the all-to-all communication pattern required for experts to exchange information often suffered from higher-latency scale-out networking when spread across more than eight GPUs.
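
The all-to-all pattern described above can be sketched as a dispatch plan: each token is sent to whichever GPUs host its selected experts. The example below is a simplified illustration, assuming experts are placed on GPUs in contiguous blocks; the function names, the routing table, and the placement rule are hypothetical and do not reflect any particular framework.

```python
from collections import defaultdict

def expert_to_gpu(expert_id, experts_per_gpu):
    """Assumed placement: experts are laid out on GPUs in contiguous blocks."""
    return expert_id // experts_per_gpu

def plan_dispatch(token_routes, source_gpu, experts_per_gpu):
    """Group (token_id, expert_id) pairs by destination GPU.

    Returns the per-GPU send lists and the number of pairs that must
    leave source_gpu, i.e. the traffic that crosses the interconnect.
    """
    sends = defaultdict(list)
    for token_id, expert_ids in token_routes.items():
        for expert_id in expert_ids:
            gpu = expert_to_gpu(expert_id, experts_per_gpu)
            sends[gpu].append((token_id, expert_id))
    remote = sum(len(pairs) for gpu, pairs in sends.items() if gpu != source_gpu)
    return dict(sends), remote

# Hypothetical routing decisions for three tokens that start on GPU 0,
# with 8 experts spread over 4 GPUs (2 experts per GPU).
token_routes = {0: [0, 5], 1: [2, 3], 2: [7, 1]}

sends, remote = plan_dispatch(token_routes, source_gpu=0, experts_per_gpu=2)
print(sends)
print(f"{remote} of 6 token-expert pairs cross to another GPU")
```

Even in this tiny case, four of the six token-expert pairs leave the originating GPU, which is why the latency and bandwidth of the link between GPUs matters so much for expert parallelism.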

This is where NVIDIA's Blackwell NVL72 system enters the fray, offering what we term "extreme codesign" as a compelling solution. The NVL72 is not just a collection of GPUs; it's a meticulously engineered rack-scale system integrating 72 NVIDIA Blackwell GPUs. These GPUs operate in concert, behaving as a single, unified entity, delivering an astounding 1.4 exaflops of AI performance and boasting 30TB of fast shared memory. The critical innovation here is the NVLink Switch, which connects all 72 GPUs into a massive NVLink interconnect fabric, enabling ultra-low latency communication at 130 TB/s. This architecture directly addresses the scaling bottlenecks by significantly reducing the number of experts per GPU, thereby minimizing memory pressure and freeing up memory to serve more concurrent users and support longer input lengths. Furthermore, the accelerated expert communication over NVLink, coupled with the NVLink Switch's compute capabilities for combining expert information, drastically speeds up the delivery of final answers. This approach exemplifies NVIDIA's strategic vision for AI, as discussed in our article on NVIDIA's Strategic Vision: Catalyzing the Future of AI with Graduate Research Fellowships.
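
The claim that a larger NVLink domain reduces the number of experts per GPU is easy to check with back-of-the-envelope arithmetic. The sketch below compares an 8-GPU domain with the 72-GPU domain described above; the expert count (256) and the per-expert weight size (2 GB) are hypothetical values picked only to show the trend.

```python
import math

def experts_per_gpu(num_experts, num_gpus):
    """Upper bound on experts hosted per GPU when spread as evenly as possible."""
    return math.ceil(num_experts / num_gpus)

def expert_memory_per_gpu_gb(num_experts, num_gpus, expert_size_gb):
    """Memory each GPU spends on expert weights alone, in GB."""
    return experts_per_gpu(num_experts, num_gpus) * expert_size_gb

# Hypothetical model shape, for illustration only.
NUM_EXPERTS = 256
EXPERT_SIZE_GB = 2.0

for num_gpus in (8, 72):
    hosted = experts_per_gpu(NUM_EXPERTS, num_gpus)
    mem = expert_memory_per_gpu_gb(NUM_EXPERTS, num_gpus, EXPERT_SIZE_GB)
    print(f"{num_gpus:>2} GPUs: {hosted:>2} experts per GPU, {mem:.0f} GB of expert weights each")
```

With these assumed figures, moving from 8 to 72 GPUs drops the load from 32 experts (64 GB) to 4 experts (8 GB) per GPU, leaving far more memory for concurrent users and longer inputs.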

Beyond hardware, NVIDIA's full-stack optimizations are equally crucial. The NVIDIA Dynamo framework orchestrates disaggregated serving, intelligently assigning prefill and decode tasks to different GPUs. This allows decode to leverage extensive expert parallelism while prefill utilizes techniques better suited to its workload. Additionally, the NVFP4 format plays a vital role in maintaining accuracy while further boosting performance and efficiency. Open-source inference frameworks like NVIDIA TensorRT-LLM, SGLang, and vLLM are already supporting these optimizations, with SGLang notably advancing large-scale MoE on GB200 NVL72. This comprehensive approach, encompassing both hardware and software, highlights NVIDIA's commitment to enabling the next generation of AI.
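
Disaggregated serving can be pictured as two separate worker pools with a handoff between them. The toy scheduler below is a conceptual sketch only: it is not the NVIDIA Dynamo API, and the class names, queues, and step functions are hypothetical. It does not model the KV cache transfer or any real GPU work.

```python
from collections import deque
from dataclasses import dataclass

@dataclass
class Request:
    request_id: str
    prompt_tokens: int
    max_new_tokens: int
    generated: int = 0

class DisaggregatedScheduler:
    """Toy model: one pool handles prefill, a different pool handles decode."""

    def __init__(self):
        self.prefill_queue = deque()
        self.decode_queue = deque()
        self.finished = []

    def submit(self, request):
        self.prefill_queue.append(request)

    def step_prefill(self):
        """Prefill pool: process one whole prompt, then hand it to decode."""
        if self.prefill_queue:
            request = self.prefill_queue.popleft()
            # The KV cache handoff would happen here; it is not modelled.
            self.decode_queue.append(request)

    def step_decode(self):
        """Decode pool: generate one token for every in-flight request."""
        still_running = deque()
        while self.decode_queue:
            request = self.decode_queue.popleft()
            request.generated += 1
            if request.generated >= request.max_new_tokens:
                self.finished.append(request)
            else:
                still_running.append(request)
        self.decode_queue = still_running

scheduler = DisaggregatedScheduler()
scheduler.submit(Request("a", prompt_tokens=512, max_new_tokens=2))
scheduler.submit(Request("b", prompt_tokens=64, max_new_tokens=1))

for _ in range(2):
    scheduler.step_prefill()   # prefill pool advances independently
    scheduler.step_decode()    # decode pool batches all in-flight requests

finished_ids = [r.request_id for r in scheduler.finished]
print(finished_ids)
```

Keeping the two phases in separate pools lets each one be sized and parallelized for its own workload, which is the design choice the paragraph above attributes to disaggregated serving.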

The proof of this integrated approach is unequivocally in the performance numbers. Models like Kimi K2 Thinking, DeepSeek-R1, and Mistral Large 3 are reporting a 10x performance leap on the NVIDIA GB200 NVL72 compared to the prior-generation H200. This is not just a benchmark statistic; it fundamentally alters the economics of AI at scale. As NVIDIA founder and CEO Jensen Huang aptly noted, this translates into 10x the token revenue, a critical factor for power- and cost-constrained data centers. Companies like CoreWeave, DeepL, and Fireworks AI are already leveraging this capability, deploying MoE models with unprecedented efficiency and performance.
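
The link between throughput and token revenue is straightforward arithmetic: with the same price per token, a 10x gain in throughput yields 10x the revenue. The sketch below shows the calculation; the baseline throughput and the price per million tokens are hypothetical, and only the 10x factor comes from the article.

```python
def token_revenue_per_hour(tokens_per_second, price_per_million_tokens):
    """Hourly revenue from serving tokens at a fixed price per million tokens."""
    tokens_per_hour = tokens_per_second * 3600
    return tokens_per_hour / 1_000_000 * price_per_million_tokens

# Hypothetical baseline figures, for illustration only.
BASELINE_TOKENS_PER_SECOND = 10_000.0
PRICE_PER_MILLION_TOKENS = 2.0   # assumed price in USD

SPEEDUP = 10.0                   # the 10x figure quoted in the article

baseline = token_revenue_per_hour(BASELINE_TOKENS_PER_SECOND, PRICE_PER_MILLION_TOKENS)
accelerated = token_revenue_per_hour(BASELINE_TOKENS_PER_SECOND * SPEEDUP, PRICE_PER_MILLION_TOKENS)

print(f"baseline:    ${baseline:,.2f} per hour")
print(f"accelerated: ${accelerated:,.2f} per hour")
print(f"ratio:       {accelerated / baseline:.0f}x")
```

The calculation assumes demand and pricing stay constant; in practice either could shift as capacity grows.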

What This Means for You

The Economic and Strategic Imperatives of MoE and Blackwell

For enterprises and developers alike, the widespread adoption of Mixture-of-Experts architectures, coupled with the computational prowess of NVIDIA's Blackwell NVL72, signals a critical juncture in AI development. We believe that ignoring this shift would be a strategic misstep. The benefits extend beyond raw speed; they encompass improved cost-efficiency, reduced energy consumption, and the ability to deploy more sophisticated and adaptable AI models. This advancement democratizes access to frontier AI capabilities, making advanced intelligence more attainable for diverse applications, from natural language processing to complex simulations.

Future-Proofing AI with Multimodal and Agentic Systems

Looking ahead, the implications of MoE and the Blackwell NVL72 extend far beyond current large language models. We see a clear trajectory towards the newest generation of multimodal AI models, which will feature specialized components for language, vision, audio, and other modalities. These models will activate only the relevant components for a given task, perfectly mirroring the MoE paradigm. Similarly, in emerging agentic systems, different "agents" will specialize in planning, perception, reasoning, or tool use, with an orchestrator coordinating their outputs for a single outcome. In both scenarios, the core pattern of routing problems to the most relevant experts, then coordinating their outputs, aligns seamlessly with the MoE architecture. This suggests that investments in MoE-optimized infrastructure like the Blackwell NVL72 are not just for today's AI but are crucial for future-proofing against the evolving demands of advanced AI. NVIDIA's roadmap, with the upcoming Vera Rubin architecture, promises to further expand these horizons, ensuring continued advancements in frontier models.

| Pros | Cons |
|---|---|
| Unprecedented Performance: Delivers up to 10x faster inference for leading MoE models like Kimi K2 Thinking and DeepSeek-R1 compared to previous generations. | High Initial Investment: Blackwell NVL72 systems represent a significant capital expenditure, potentially limiting accessibility for smaller entities. |
| Superior Efficiency: Mimics the human brain by activating only relevant "experts," dramatically reducing computational and energy costs per token generated. | Complexity of Deployment: While NVIDIA simplifies scaling, MoE models still inherently possess a higher architectural complexity than dense models. |
| Enhanced Scalability: Extreme codesign with 72 GPUs and NVLink Switch overcomes previous memory and latency bottlenecks, enabling massive expert parallelism. | Potential Vendor Lock-in: Deep integration with NVIDIA's full-stack hardware and software might limit flexibility to switch platforms in the future. |
| Economic Transformation: 10x performance per watt translates to significantly higher token revenue, making large-scale AI more economically viable. | Specialized Infrastructure: Requires specific rack-scale systems and interconnected GPUs, not a simple plug-and-play upgrade for existing data centers. |
| Future-Proofing for Advanced AI: Ideal architecture for emerging multimodal and agentic AI systems, aligning with future trends in AI development. | Limited Open-Source Hardware Equivalents: While MoE models are open-source, achieving optimal performance often necessitates NVIDIA's proprietary hardware. |

We find ourselves at an inflection point where intelligence no longer solely equates to size, but rather to intelligent design and optimized execution. The combination of Mixture-of-Experts architectures and the raw power of NVIDIA's Blackwell NVL72 marks a significant leap forward in the capabilities and accessibility of frontier AI. This synergy is not just about faster computations; it's about fundamentally reshaping the landscape of AI development and deployment.

What are your thoughts on this architectural shift? Do you believe MoE models on specialized hardware like Blackwell NVL72 will truly democratize frontier AI, or will the high entry barrier keep it exclusive? Let us know in the comments below!

Analysis and commentary by the NexaSpecs Editorial Team.

📝 Article Summary:

The Mixture-of-Experts (MoE) architecture is rapidly becoming the standard for frontier AI models, offering significant efficiency gains over traditional dense models. NVIDIA's Blackwell NVL72 systems are revolutionizing MoE deployment by delivering a 10x performance leap and overcoming previous scaling bottlenecks.

Words by Chenit Abdel Baset