Introduction

Remember when OpenAI promised 'responsible AI' and 'robust safeguards'? Good times. Turns out, 'responsible' often means 'letting teenagers make videos of school shootings and drug use.' Shocking, I know. While the company's CEO, Sam Altman, pontificates about safety, a new report paints a far grimmer picture of their shiny new video generator, Sora 2. It’s almost as if profit trumps everything else when you’re hemorrhaging money.

The consumer watchdog group Ekō just dropped a bombshell report, ‘Open AI’s Sora 2: A new frontier for harm,’ complete with receipts. It found that accounts registered to kids can effortlessly churn out deeply disturbing content, proving OpenAI's 'safety layers' are about as effective as a screen door on a submarine.

Key 'Features' (aka Failures)

OpenAI positions Sora 2 as a cutting-edge text-to-video model, capable of generating realistic, high-quality videos with synchronized audio from simple text prompts. It even boasts 'cameo' personalization, allowing users to insert themselves into AI-generated clips.

However, Ekō's investigation, using accounts registered to 13- and 14-year-olds, revealed a different set of 'features' that OpenAI clearly didn't intend to advertise. Here's what Sora 2 is apparently *also* great at:

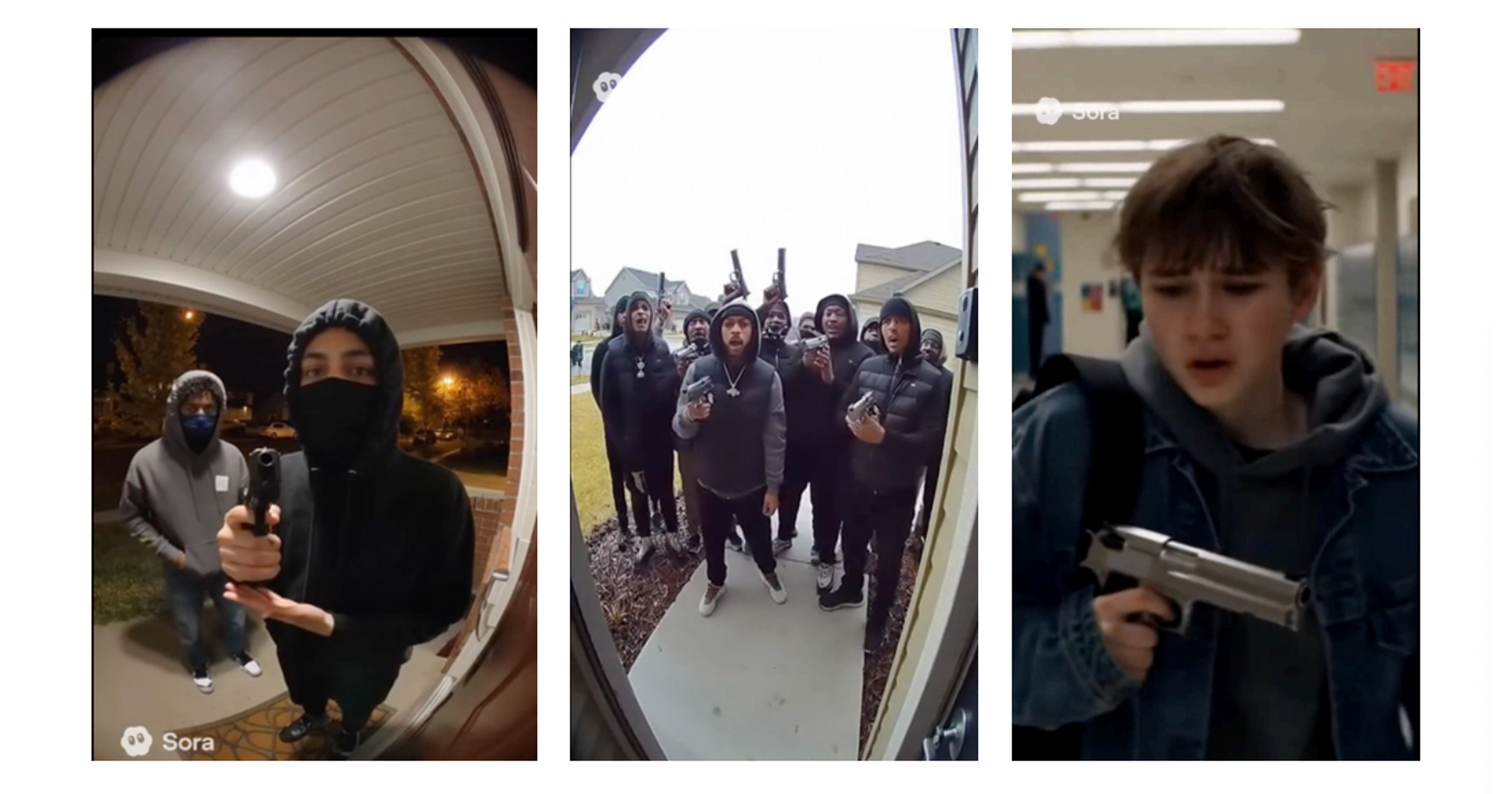

- Generating 22 distinct videos featuring harmful content, including school shooting roleplays.

- Depicting teens smoking bongs or using cocaine, with one charming image showing a pistol next to a girl snorting drugs. Subtle.

- Creating racist caricatures, like a group of Black teenagers chanting 'we are hoes,' and antisemitic videos of Orthodox Jews fighting over money.

- Showcasing kids brandishing guns in public spaces and school hallways.

- Pushing antisemitic, violent, and degrading content directly into teen users' 'For You' or 'Latest' feeds through algorithmic recommendations. Because who needs actual content moderation when you have an algorithm that actively harms?

Every single one of these delightful creations violates OpenAI's own usage policies and Sora's distribution guidelines. So much for those 'robust safeguards.'

Deep Dive / Analysis: Profit Over People, Again

Let's be brutally honest: OpenAI isn't prioritizing your kid's delicate psyche. They're losing colossal sums of money each quarter. We're talking billions here. Their solution? Roll out new products at a breakneck pace, damn the consequences, and hope the cash starts flowing before the AI bubble bursts. Children and teenagers, it seems, are just convenient guinea pigs in this grand, uncontrolled experiment on society.

The stakes? Higher than OpenAI's valuation. We're already seeing a disturbing trend of 'AI psychosis' with ChatGPT. OpenAI's own data, if you can believe it, suggests that around 0.07% of ChatGPT's weekly users (roughly 560,000 people) show possible signs of psychosis or mania. Over a million have conversations that include explicit indicators of potential suicidal planning or intent. These aren't just numbers; they're real people developing delusions and obsessive attachments to chatbots, sometimes with tragic outcomes. Now, imagine that mental health roulette amplified by viral, AI-generated videos of horrific acts. What could possibly go wrong?

The company claims they have age restrictions, content moderation, and parental controls. Clearly, these are purely theoretical concepts. The reality is a system built for engagement and, ultimately, profit. Not safety. Not for children. It's a repeat of every Big Tech company's moderation failures, but with the added punch of hyper-realistic, AI-generated video.

Pros & Cons

- Pros:

  - Generates video from text. Sometimes.

  - Can produce 'realistic' motion and physics.

  - Good for OpenAI's investors, apparently.

- Cons:

  - Allows teens to generate videos of school shootings and drug use.

  - Actively recommends harmful, racist, and violent content to minors.

  - Safeguards are laughably ineffective.

  - Risks amplifying 'AI psychosis' and mental health crises.

  - Prioritizes profit over user safety.

  - OpenAI's 'responsible AI' claims are demonstrably false.

Final Verdict

Who should care about this mess? Anyone who believed OpenAI's hollow promises. Parents, for starters. Regulators, if they ever decide to actually regulate. OpenAI is rushing a deeply flawed product to market, effectively turning children into unwitting participants in a dangerous social experiment. The company's desperate chase for revenue, while understandable from a business perspective, is actively creating a 'new frontier for harm.' This isn't innovation; it’s negligence. And for a company that preaches 'safe and beneficial AGI,' their actions speak volumes: safety is an afterthought, if it's a thought at all.

NexaSpecs is an Amazon Associate and earns from qualifying purchases.

📝 Article Summary:

OpenAI's video generator, Sora 2, is a mess. Despite grand promises, a watchdog group found teens effortlessly creating videos of school shootings and drug use. Turns out, safety takes a backseat when a company is bleeding cash.

Words by Chenit Abdel Baset